Generalized Linear Model

Linear Model

A linear model assumes the data generation process is

And the error can be measurement error, or covariates other than those in that can affect . Since is not random variable, we can just add it up to the expectation. Since this expectation depends on the value of , it’s more proper to write . And there’re some common choice to use to emphasize it’s a random variable. With this normal distribution assumption on the error, we have:

Generalized Linear Model

Let

be the mean function of y. The word mean function means that this function spits out the mean value of (nothing special in fact). The regular linear model is:

The generalized one is we cover the mean function with a continuous real-valued link function s.t.

This is saying in generalized linear model, the dependent variable that we’re modeling, is not directly connected with the independent variable/predictor , but restricted through a link function . Forwardly, is taking a random variable (distribution) that may only certain ranges output it into real value. If only has positive values, we need to map it into real ones because that’s how we model it by . Inversely, the better way to think this is we have that restricts the input to certain range like only positive values. But not all continuous work (I’m not sure work or good), we need continuous and increasing (?). In general, we want the distribution we are working on to be in the exponential family.

Exponential Family

Intuitively, an exponential family is a family of distribution that restricts the interaction between the random variable and the parameter of its pdf model into only a multiplication in the exponential of . Most common distributions we’ve seen: Bernoulli, Binomial, Poisson, Gaussian, Gamma, Beta and so on.

A family of distribution is said to be a k-th parameter exponential family on (since ) if there exists

- A vector of of

- A vector of of , that make sure x has same dimension with the parameter

is a normalizing factor so it doesn’t matter if we put it inside or outside the exponential. is a change of measure, depending our choice, it will often be 1.

Two Parameter Gaussian

A two-parameter gaussian, the regular one, as we’ve said is a member of the exponential family. Let’s check it out. Let , then ,

Thus

Shorter is

We can move outside, let it be a constant function of , but it doesn’t matter.

Canonical Exponential Family

What’s better is that we can reduce the exponential family to have the random variable and the parameter to interact directly. And we have only one unknown parameter.

The is the dispersion parameter that's always assumed known. And we assume it’s always non-negative.

One Parameter Gaussian

If we assume we’ve known the variance, Gaussian can be written in the above canonical form. Let

Therefore

Likelihood Property and First two moments

Two important likelihood properties for finding expectation and variance for canonical family are: (WLOG, assuming we have only scalar $x$, let be the log probability function)

Both of which are obtained from the notes in the section of fisher information.

These can bring us, with as the canonical exponential family, .

And

This implies that needs to be strictly convex since we need its second derivative non-negative(? Probably dispersion is never negative).

Poisson distribution

With these tools we can get Poisson faster. Let , let

Therefore , , and .

Link Function

For LM, the link function .

For poisson data, where we assume that , we need a link function that can constrain the a real-valued , into only positive numbers . A log function will be a natural one. The other way to see this is we constrain the the input to only output a positive value (technically non-negative).

For binary outcome data, we typically use logit. But there’re others. In fact, the inverse cdf of all probability distribution can map binary outcome data into real values. But there’re benefits that we choose the “correct” one.

Canonical Link

Canonical link arises naturally from the distribution itself. The link that

connects to the exponential family parameter (it means the parameter is in the form of exponential family like for Poisson ) is the canonical link. Canonical link is good, since we’re directly modeling one parameter of the distribution. And

is strictly increasing (idk why this is good). Let’s take Bernoulli as an example.

Bernoulli distribution

The cdf of Bernoulli:

In order to make canonical family true, need to let $\theta=\displaystyle\ln\frac{p}{1-p}$, then $p=\displaystyle\frac{e^\theta}{1+e^\theta} $. And $\ln(1-p)=\displaystyle \ln(\frac{1}{1+e^\theta})=-\ln(1+e^\theta)$. This leads up to:

This tell us . Then

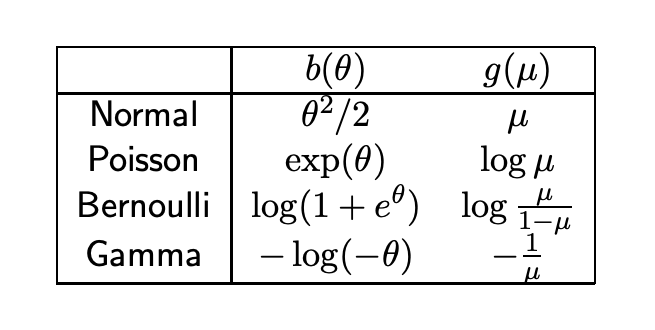

And certainly we have a table for this

MLE

With all these, we start to be interested in given log likelihood, how to compute the derivative of it with respect to the weight parameter $\displaystyle \frac{\partial \ell}{\partial\boldsymbol\beta}$.

With data

Assume we have data $\mathcal D=(\mathbf x_1,y),…,(\mathbf x_n,y_n)$, and compose all the independent variables into , and .

From $\theta$ to $\beta$

Given the data, I can start using $Y$ as the random variable s.t. $p_\theta(y)=Pr(Y=y;\theta)$. This aligns with the assumption at the beginning that in GLM, the dependent variable $Y$ is in exponential family. Therefore we have

as the [probability density function] and at the same time the log likelihood function of a single $y$. In the section of likelihood property, we have $b’(\theta)=\mu(\mathbf x)$. And from as the fundamental assumption of GLM, we have $g(\mu(\mathbf x))=\mathbf x^\top\boldsymbol\beta$. Then:

We have . If we have canonical link, , according to (9) in the canonical link section.

Since we’re dealing with data, it’s more appropriate to label $\mathbf x^\top\boldsymbol\beta$ as $\mathbf x_i^\top\boldsymbol\beta$. Think that we’re on the processing of infering the correct $\boldsymbol\beta$. This leads to $\theta$ is also per $\mathbf x_i$:

And therefore

Log likelihood

Then we can express the log likelihood as

If we have canonical link, $h(·)$ is identity:

Taking the derivative w.r.t. $\beta$ we can eliminate . What’s left it’s a constant , and two terms related to . The log likelihood does not have analytical solution. So we care about if the function is concave (or negative convex) so we have a theoretical guarantee a sequential approximation method can converge. W.r.t $\beta$ , $y_i\mathbf x_i^\top\boldsymbol\beta$ is linear. What about $b(·)$? We already know that $b’’(\theta)\phi=\mathbb V(x)$. Thus as long as $\phi>0$, $b$ will be strictly convex. Then a negative convex function will lead to concave. So the whole function $\ell(\beta,\mathcal D)$ is concave.

Methods for $\boldsymbol\beta$

Newton-Raphson and Fisher-scoring

Newton methods can be used to approximate $x$ values in a function $f(x)$. Linear approximation (Euler’s Method) is approximating the $y$ value by derivative at a starting point (and iteratively next points and so on). Newton methods use derivative as well but the reciprocal so that we’re moving $x$. Specifically, if we want to find a point s.t. , then

Similarly, if we want to find a point s.t. the derivative of its log likelihood is zero , we just use the second derivative:

These can be done a lot of times getting $\theta_1,\theta_2,…$ and etc. to get a better approximation. Generalized to the multivariate case , the thing in the denominator is the Hessian matrix $H_\ell$, and we need vectors and so on:

Now the Fisher scoring comes to play. Remember the Fisher information is also the negative expectation of second derivative of log probability (likelihood):

Since the Fisher matrix and its inverse is easier to compute (because it’s a vector of gradient times a vector of gradient), we can replace the Hessian with Fisher:

(note a sign change) Notice that the Fisher matrix requires the parameter to be “true” , here we don’t. In fact, if we have canonical link, the Fisher matrix is always equal to the Hessian matrix (negative).

Iterated Re-weighted Least Square (IRLS)

Let’s finish what we didn’t finish in e.q. (13), not (14) because it’s a pain, take the derivative:

By letting , , and $\displaystyle W_i=\frac{h’\left(\mathbf{x}^{\top }_{i}\boldsymbol{\beta }\right)}{g’( \mu _{i}) \phi }$. Let’s rewrite this with matrix. Let $W=diag(W_i)$, then:

Though look complicated, writing in this way helps us prepare for the second derivative. Since second derivative it’s a matrix not a gradient vector. Let’s step back to do a scalar one:

And then note that

Also note that, since we’re eventually taking the expectation, $\mathbb{E}[ y_{i} -\mu _{i}]=0$ because the expectation is w.r.t. the likelihood (see note), the leads to the left term is equal to the right term which is $\mathbb E[Y_i\vert\mathbf x_i]$. Then

And therefore,

Now let’s try to expand the Fisher information update similar to e.q. (16):

The right hand side is pretty much similar to the solution to ordinary least square, which is $\hat{\beta } =\left(\mathbf{X}^{\top }\mathbf{X}\right)^{-1}\mathbf{X^{\top } y}$. Let $\mathbf y=\tilde{\mathbf y}-\tilde\mu$, we’re just adding a covariance matrix to the solution.

Weighted Least Square

Weighted least square can be many forms and more broadly used in other cases. In this setting, weighted least square is weighted in the case that we do not assume the data is generated homoscedastically, that is they have different variance (same zero mean still). Then we assume we have a diagonal (not have to be) matrix $W$ and $\epsilon\sim\mathcal N(0,\sigma^2W^{-1})$. To adjust the different variance across $\epsilon_i$, $Y_i\vert\mathbf x_i\sim\mathcal N(\mathbf x^\top\boldsymbol\beta,\sigma^2W^{-1})$ and:

And when we’re performing $\arg\min_\beta \prod \log p(y_i\vert\mathbf x_i)$, we can modify the exponential part a little bit:

And resemble $\hat{\beta } =\left(\mathbf{X}^{\top }\mathbf{X}\right)^{-1}\mathbf{X^{\top } y}$, the solution will become:

Therefore, let

And update

In general, each time we need to compute $\mathbf x^\top\boldsymbol\beta^{(t)}$, and feed this into $h’(·)$, $(g’\circ g^{-1})(·)$ like in e.q. (18) to obtain $W^{(t)}$, $\tilde{\mathbf y}^{(t)}$, and $\tilde\mu^{(t)}$ then obtain get $s^{(t)}$ and update the $\beta$ until convergence.